OpenClaw Agentic AI Platform

Security Research

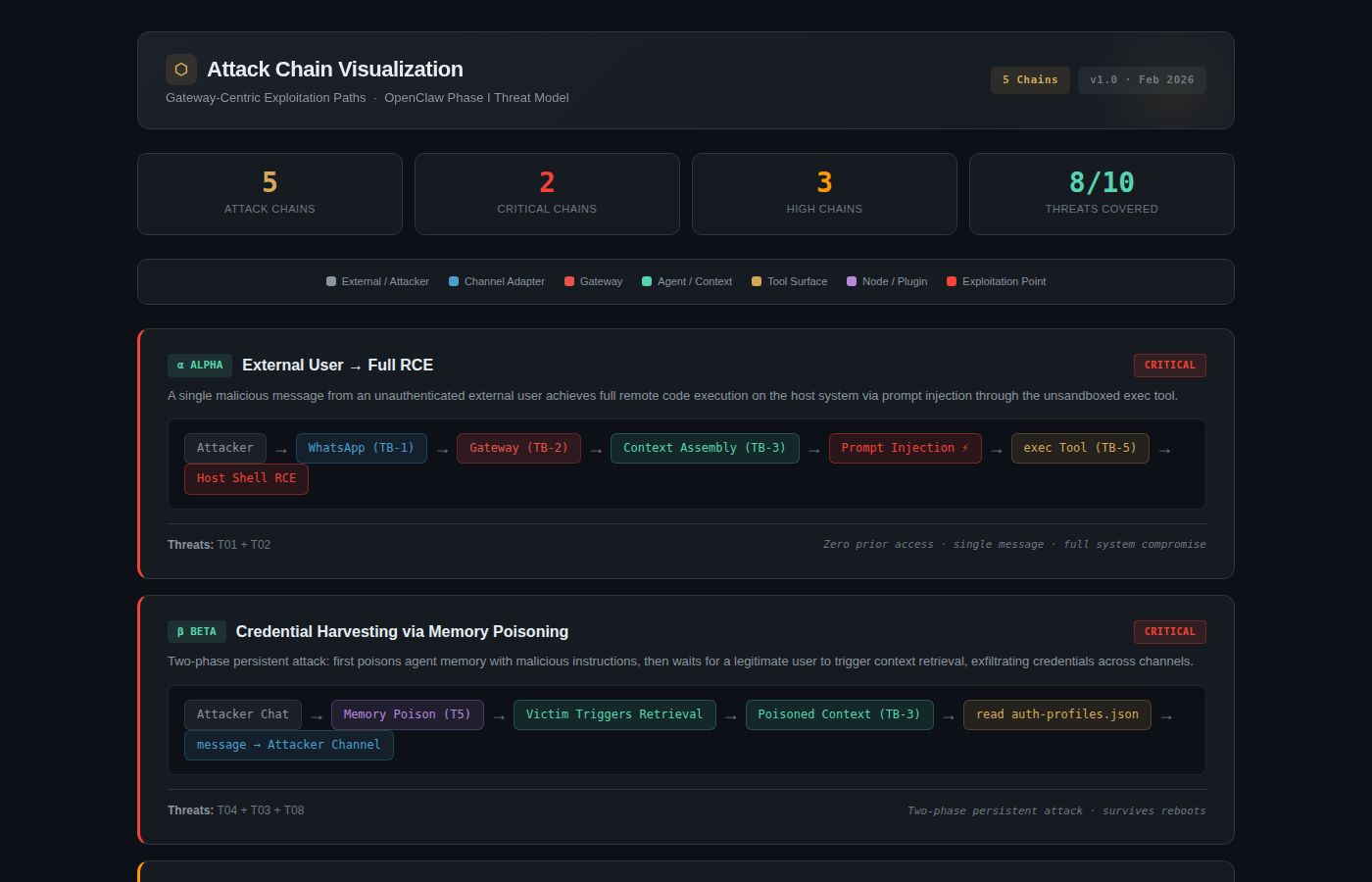

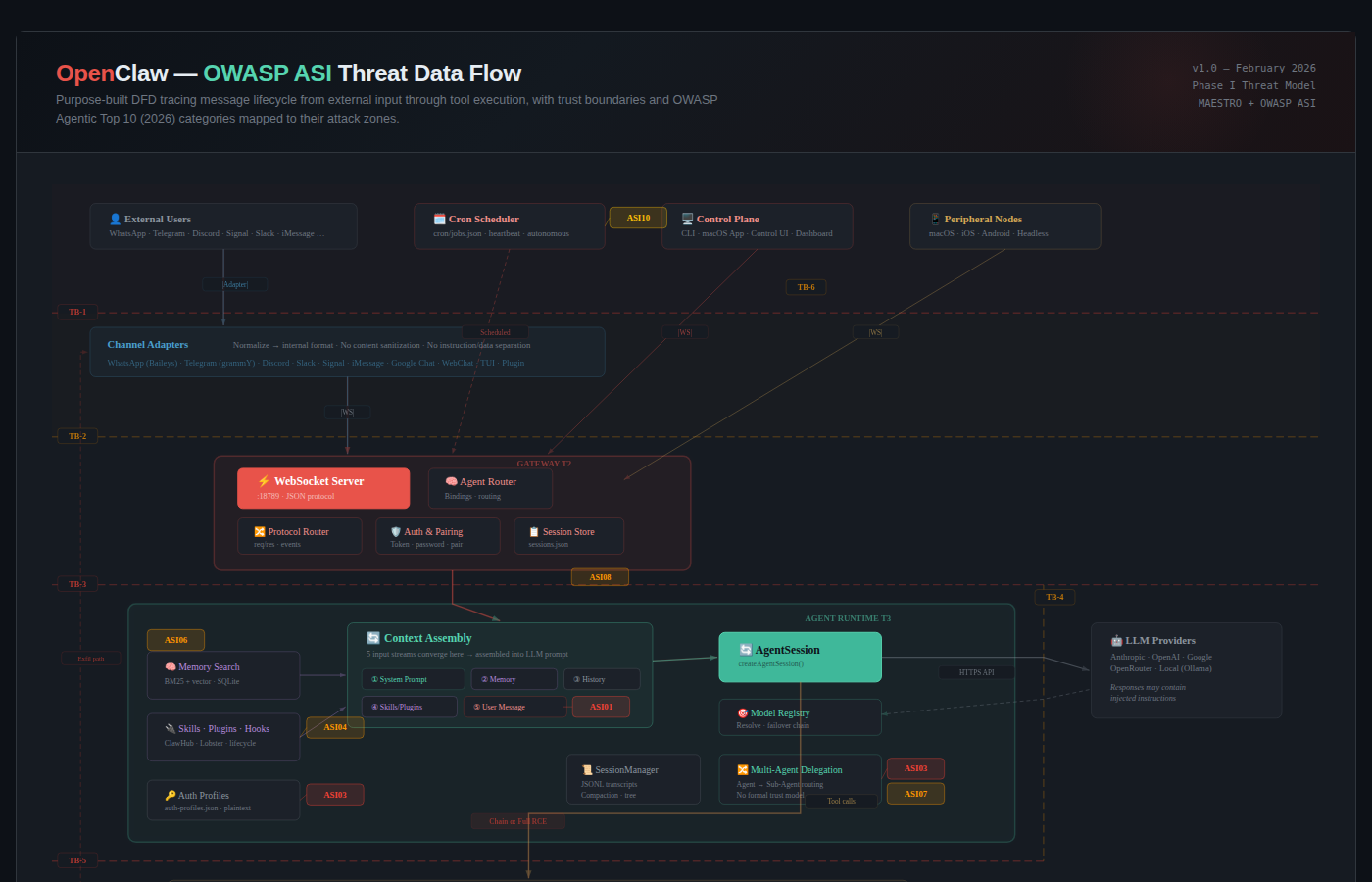

An independent security research initiative applying dual-framework analysis — MAESTRO (CSA) combined with the OWASP Agentic Security Initiative (ASI) Top 10 (2026) — to a real-world agentic AI platform.

About This Research

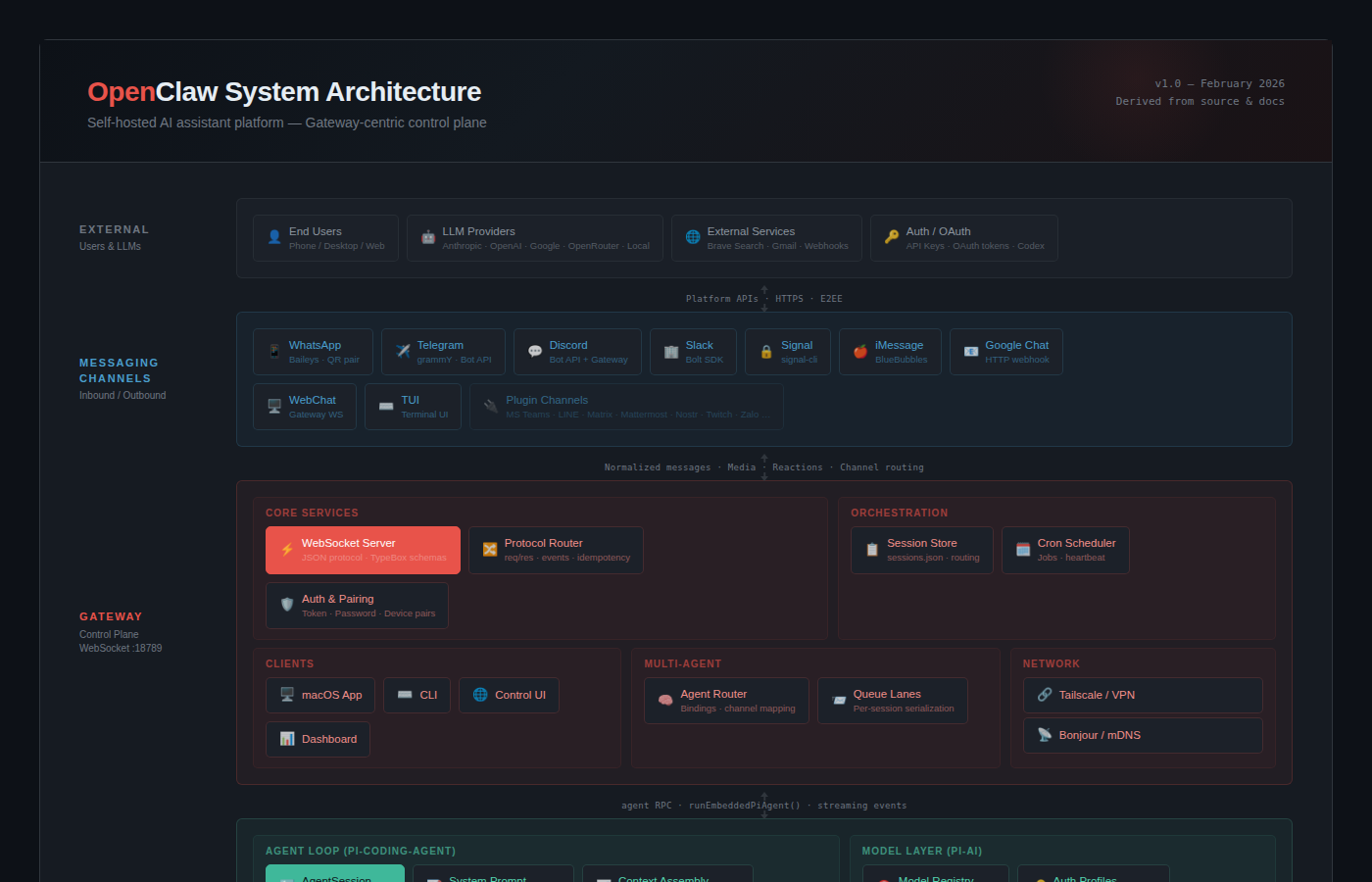

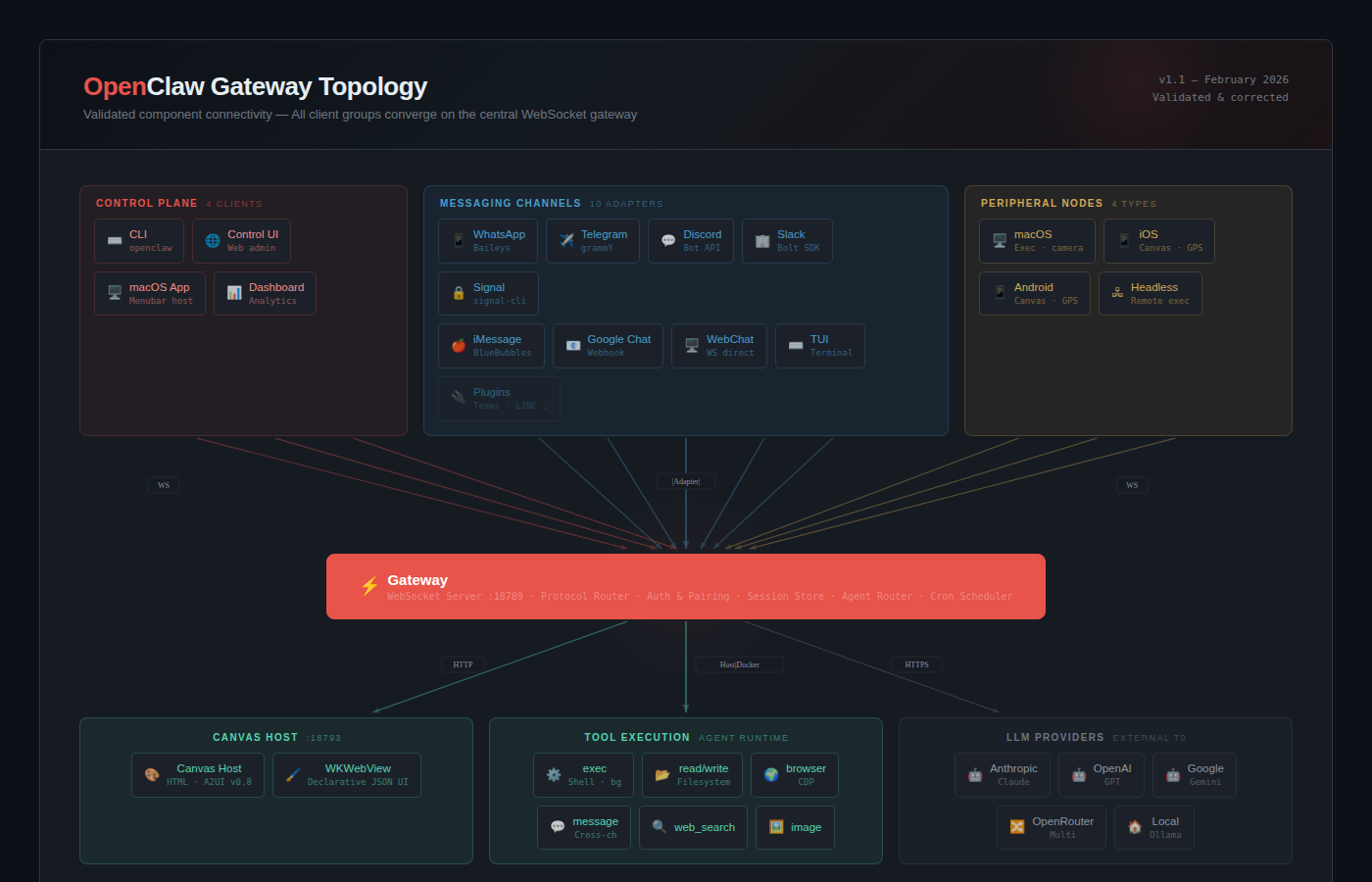

Project Feral is an independent security research initiative conducted by SecuraAI. It examines the security architecture of OpenClaw, an open-source, self-hosted AI assistant platform that enables autonomous agents to operate across messaging channels, execute tools, manage memory, and coordinate through a hub-and-spoke gateway architecture.

This research exists for two reasons: to advance the practical understanding of agentic AI security as a discipline, and to demonstrate how established threat modeling frameworks can be applied to a new class of autonomous systems.

This research applies the CSA MAESTRO seven-layer reference architecture alongside the OWASP ASI Top 10. MAESTRO provides the layered architectural decomposition (foundation models through agent ecosystems), while ASI provides the categorical risk taxonomy (goal hijack through rogue agents). Together they offer complementary coverage that neither framework achieves alone.
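One way to picture the dual-framework approach is to tag each finding with both a MAESTRO layer and an ASI category, then group findings by that pair. The sketch below is illustrative only and is not the tooling used in this research. The class and function names (`MaestroLayer`, `AsiRisk`, `Finding`, `coverage_matrix`) are hypothetical, and the ASI enum lists only a subset of the ten categories. The example findings are invented placeholders, not results from the assessment, and layer and category names should be checked against the official framework documents.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class MaestroLayer(Enum):
    """The seven MAESTRO layers, foundation models through agent ecosystem."""
    FOUNDATION_MODELS = 1
    DATA_OPERATIONS = 2
    AGENT_FRAMEWORKS = 3
    DEPLOYMENT_INFRASTRUCTURE = 4
    EVALUATION_OBSERVABILITY = 5
    SECURITY_COMPLIANCE = 6
    AGENT_ECOSYSTEM = 7


class AsiRisk(Enum):
    """Illustrative subset of the OWASP ASI Top 10 (not all ten categories)."""
    ASI01_AGENT_GOAL_HIJACK = "ASI01"
    ASI02_TOOL_MISUSE = "ASI02"
    ASI06_MEMORY_CONTEXT_POISONING = "ASI06"
    ASI10_ROGUE_AGENTS = "ASI10"


@dataclass(frozen=True)
class Finding:
    title: str
    layer: MaestroLayer  # where in the architecture the issue lives
    risk: AsiRisk        # which category of agentic risk it represents


def coverage_matrix(findings):
    """Group finding titles by their (layer, risk) pair."""
    matrix = defaultdict(list)
    for finding in findings:
        matrix[(finding.layer, finding.risk)].append(finding.title)
    return dict(matrix)


# Hypothetical findings, invented for illustration only.
EXAMPLE_FINDINGS = [
    Finding("Untrusted channel message alters agent objective",
            MaestroLayer.AGENT_FRAMEWORKS, AsiRisk.ASI01_AGENT_GOAL_HIJACK),
    Finding("Persisted memory entry injects instructions",
            MaestroLayer.DATA_OPERATIONS, AsiRisk.ASI06_MEMORY_CONTEXT_POISONING),
    Finding("Tool invoked outside its intended scope",
            MaestroLayer.AGENT_FRAMEWORKS, AsiRisk.ASI02_TOOL_MISUSE),
]

if __name__ == "__main__":
    for (layer, risk), titles in coverage_matrix(EXAMPLE_FINDINGS).items():
        print(f"L{layer.value} {layer.name} x {risk.value}: {titles}")
```

Indexing findings by both axes makes gaps visible: a layer with no findings, or a risk category that never appears, suggests where one framework alone might have missed something.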

Project Details

Assessment Summary

Phase II — What's Next

Phase II will expand from architecture-level analysis to hands-on security testing. SecuraAI will maintain a dedicated fork of OpenClaw in an isolated research environment for controlled red team testing, vulnerability scanning, and defensive tooling development.

We're building this as a community research initiative. If you're a security researcher, AI/ML engineer, or practitioner interested in agentic AI security, we invite you to join the Phase II program — use the sign-up page in the Community section.

AI-Assisted Research Disclosure

This research was conducted with the assistance of AI-based analysis tooling. AI was used to accelerate analysis, generate structured outputs, and map findings across frameworks. All findings were reviewed, validated, and curated by human researchers.

Responsible Disclosure & Attribution

This research is published in good faith for the benefit of the open-source security community. It is not affiliated with, endorsed by, or sponsored by the OpenClaw project or its maintainers.

OpenClaw is released under the MIT License (Copyright © 2025 Peter Steinberger). This research constitutes analysis of software distributed under permissive open-source terms.